Análisis estadístico de la palabra y sílabas españolas

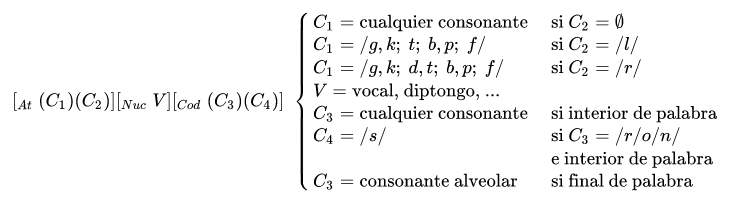

Cada lengua tiene su propia estructura silábica generalmente dividida en ataque, núcleo vocálico y coda. El núcleo vocálico suele ser el elemento imprescindible como ocurre en español que puede prescindir del ataque y coda consonánticos. Las condiciones de la estructura silábica del español son (donde C es consonante y V vocal):

El análisis estadístico explicado a continuación facilita generar sílabas y palabras españolas con mayor precisión, gracias al programa de generacíon que he desarrollado. Este programa genera texto tomando en consideración las frecuencias de cada alternativa

FUENTE: Wikipedia

Tabla de frecuencias

Toma como datos un lemario de 88449 palabras. No incluye por tanto flexión verbal, nominal, etc...

- O

- onset

- N

- nucleus

- C

- code

| syllable | syllable patterns | syllable structure | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| length | non final | final | onset | nucleus | codes | codes_final | codes_non_final | ||||||||

| 4 | 28711 | ON | 186757 | ON | 54479 | t | 35104 | a | 98986 | n | 28861 | r | 14512 | n | 23245 |

| 3 | 27670 | ONC | 47591 | ONC | 25414 | c | 33283 | o | 66754 | r | 24826 | n | 5620 | s | 12954 |

| 5 | 13137 | N | 15723 | N | 3721 | d | 25573 | e | 65633 | s | 15047 | l | 2740 | r | 10314 |

| 2 | 10796 | NC | 12552 | NC | 1856 | r | 22833 | i | 40858 | l | 7088 | s | 2093 | l | 4348 |

| 6 | 3836 | m | 19946 | u | 15006 | m | 3926 | d | 1284 | m | 3824 | ||||

| 7 | 880 | n | 17180 | io | 6618 | c | 1585 | z | 492 | c | 1553 | ||||

| 1 | 717 | l | 16722 | ia | 4624 | d | 1477 | y | 152 | y | 849 | ||||

| 8 | 120 | s | 15688 | í | 4593 | y | 1001 | m | 102 | x | 583 | ||||

| 0 | 33 | g | 10585 | ue | 3234 | z | 930 | t | 84 | p | 556 | ||||

| 9 | 12 | b | 9734 | ie | 2986 | x | 639 | x | 56 | z | 438 | ||||

| 10 | 7 | v | 6863 | ua | 1977 | p | 576 | c | 32 | b | 401 | ||||

| z | 5111 | ui | 1951 | b | 416 | j | 23 | g | 360 | ||||||

| tr | 4970 | au | 874 | g | 366 | p | 20 | ns | 343 | ||||||

| j | 4767 | ai | 516 | ns | 347 | b | 15 | d | 193 | ||||||

| ch | 4737 | ei | 366 | t | 175 | f | 13 | t | 91 | ||||||

| ll | 3850 | oi | 224 | f | 53 | k | 11 | f | 40 | ||||||

| rr | 3705 | uo | 178 | j | 27 | h | 6 | rs | 22 | ||||||

| q | 3106 | uí | 175 | rs | 22 | g | 6 | h | 14 | ||||||

| ñ | 2016 | uia | 82 | h | 20 | ns | 4 | w | 6 | ||||||

| bl | 1916 | uie | 74 | k | 14 | v | 3 | j | 4 | ||||||

| k | 154 | üi | 66 | w | 8 | w | 2 | k | 3 | ||||||

Programa en python

Los datos arriba mostrados han sido generados mediante el siguiente programa

Para segmentar las sílabas se han utilizado expresiones regulares, aunque bien podía haberse hecho más fácilmente con una gramática dependiente de contexto

import re

import collections

import itertools

def merge_data(headers, data):

"""

merges data into a table

is assumed that each cell is a length-2 tuple or list ['', '']

"""

# The itertools function zip_longest() works with uneven lists

transposed_table = list(itertools.zip_longest(*data, fillvalue=['', '']))

firstrow = list(itertools.chain.from_iterable(transposed_table[0]))

assert (len(headers) == len(firstrow)), "lists are of different size!"

result = [headers]

for i in transposed_table:

# print(list(itertools.chain.from_iterable(i)))

result.append(list(itertools.chain.from_iterable(i))) # flattens first list level

return result

# lemario DRAE tomado de página no oficial. A 2010-10-17 eran 88449 palabras

with open('./lemario.txt', 'r') as f:

raw_text = f.read() # readlines()

# remove 'tildes' and uppercase letters

text = raw_text.lower().replace("á", "a").replace("é", "e").replace("í", "í").replace("ó", "o").replace("ú", "u")

# REGEX SECTION

r_syllableES = r"(?:ch|ll|rr|qu|[mnñvzsyjhxw]|[fpbtdkgc][lr]?|[lr])?" \

r"(?:" \

r"[iuü][eaoéáó][iyu]" \

r"|[aá]h?[uú][aá]" \

r"|[iuü]h?[eaoéáó]" \

r"|[eaoéáó]h?[iyu]" \

r"|[ií]h?[uú]" \

r"|[uúü]h?[iíy]" \

r"|[ieaouíéáóúü]" \

r")" \

r"(?:(?:" \

r"(?:(?:n|m|r(?!r))s?(?![ieaouíéáóúü]))" \

r"|(?:(?:[mnñvzsyjhxw]|l(?!l))(?![ieaouíéáóúü]))" \

r"|(?:(?:[fpbtdkg]|c(?!h))(?![lr]?[ieaouíéáóúü]))" \

r"))?"

# append one of this to regex above

final = r'$'

non_final = r'(?!$)'

# O N C

r_onset = "^[^aeiouáéíóúü]+"

r_nucleus = "[aeiouáéíóúü]+"

r_code = "[^aeiouáéíóúü]+$"

# hoy many SYLLABLES? SYLLABLES length

words_syllable_length = []

words = text.split("\n")

for i in words:

syllables = re.findall(r_syllableES, i)

words_syllable_length.append(len(syllables))

# print(i, len(syllables))

words_syllable_length_freq = collections.Counter(words_syllable_length).items()

# EXTRACT SYLLABLES & final / non final

syllables = re.findall(r_syllableES, text, re.MULTILINE)

syllables_final = re.findall(r_syllableES + final, text, re.MULTILINE)

syllables_non_final = re.findall(r_syllableES + non_final, text, re.MULTILINE)

# SYLLABLE STRUCTURE

# ON ONC N NC & final / non final

# final

syllable_structure_final = []

for i in syllables_final:

if re.search(r_onset, i):

if re.search(r_code, i):

syllable_structure_final.append('ONC')

else:

syllable_structure_final.append('ON')

else:

if re.search(r_code, i):

syllable_structure_final.append('NC')

else:

syllable_structure_final.append('N')

syllable_structure_final_freq = collections.Counter(syllable_structure_final).items()

# non final

syllable_structure_non_final = []

for i in syllables_non_final:

if re.search(r_onset, i):

if re.search(r_code, i):

syllable_structure_non_final.append('ONC')

else:

syllable_structure_non_final.append('ON')

else:

if re.search(r_code, i):

syllable_structure_non_final.append('NC')

else:

syllable_structure_non_final.append('N')

syllable_structure_non_final_freq = collections.Counter(syllable_structure_non_final).items()

new_text = "\n".join(syllables)

# ONSETS

onsets = re.findall(r_onset, new_text, re.MULTILINE)

# NUCLEUS

nucleus = re.findall(r_nucleus, new_text, re.MULTILINE)

# CODES

codes = re.findall(r_code, new_text, re.MULTILINE)

codes_final = re.findall(r_code, "\n".join(syllables_final), re.MULTILINE)

codes_non_final = re.findall(r_code, "\n".join(syllables_non_final), re.MULTILINE)

# collections.Counter creates a frequency dictionary

# items() convert a dictionary into a list of tuples

onsets_freq = collections.Counter(onsets).items()

nucleus_freq = collections.Counter(nucleus).items()

codes_freq = collections.Counter(codes).items()

codes_final_freq = collections.Counter(codes_final).items()

codes_non_final_freq = collections.Counter(codes_non_final).items()

# prepare table output

table_temp = [words_syllable_length_freq, syllable_structure_non_final_freq, syllable_structure_final_freq,

onsets_freq, nucleus_freq, codes_freq, codes_final_freq, codes_non_final_freq]

headings = ["words syllable length", "f", "syllable structure non final", "f", "syllable structure final", "f",

"onset", "f", "nucleus", "f", "codes", "f", "codes_final", "f", "codes_non_final", "f"]

table = merge_data(headings, table_temp)

# export table to a 'tab separated values' file

with open('./output.tsv', 'w') as f:

for row in table:

f.write("\t".join(str(x) for x in row) + '\n')